Getting Started

stellar-etl-airflow GitHub repository

GCP Account Setup

The Stellar Development Foundation runs Hubble in GCP using Composer and BigQuery. To follow the same deployment, you will need access to a GCP project. Instructions can be found in the Get Started documentation from Google.

Note: BigQuery and Composer should be available by default. If they are not, you can find instructions for enabling them in the BigQuery or Composer Google documentation.
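As a rough illustration of the note above, the sketch below checks a list of enabled services for the two APIs this guide relies on and builds the `gcloud services enable` command for whichever are missing. It assumes the input holds one service name per line, for example as captured from `gcloud services list --enabled --format="value(config.name)"`. The sample string is made up for illustration and is not output from a real project.

```python
from typing import List

# APIs this deployment relies on (service names as used by `gcloud services`).
REQUIRED_SERVICES = ["bigquery.googleapis.com", "composer.googleapis.com"]


def missing_services(enabled_output: str) -> List[str]:
    """Return the required services that do not appear in the enabled list.

    `enabled_output` is expected to hold one service name per line.
    """
    enabled = {line.strip() for line in enabled_output.splitlines() if line.strip()}
    return [name for name in REQUIRED_SERVICES if name not in enabled]


def enable_command(services: List[str]) -> List[str]:
    """Build the gcloud command that enables the given services."""
    return ["gcloud", "services", "enable", *services]


# Sample input for illustration only, not real output from a project.
sample = "bigquery.googleapis.com\nstorage.googleapis.com\n"
todo = missing_services(sample)
if todo:
    # Printed rather than executed; pass the list to subprocess.run to run it.
    print(" ".join(enable_command(todo)))
```

The command is only printed here, so the sketch can be tried without touching a project.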

Create GCP Composer Instance to Run Airflow

Instructions on bringing up a GCP Composer instance to run Hubble can be found in the Installation and Setup section in the stellar-etl-airflow repository.

Hardware requirements vary widely depending on the Stellar network data you require. The default GCP settings may be higher or lower than what you actually need.

Configuring GCP Composer Airflow

There are two things required for the configuration and setup of GCP Composer Airflow:

- Upload DAGs to the Composer Airflow Bucket

- Configure the Airflow variables for your GCP setup

For more detailed instructions please see the stellar-etl-airflow Installation and Setup documentation.

Uploading DAGs

Within the stellar-etl-airflow repo there is an upload_static_to_gcs.sh shell script that will upload all the DAGs and schemas into your Composer Airflow bucket.

This can also be done with the gcloud CLI or the console by manually selecting the DAGs and schemas you wish to upload.
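For the manual gcloud route, a minimal sketch of building the upload command for a single file might look like the following. It uses `gcloud composer environments storage dags import`. The environment name, region, and file path are placeholders rather than values from the stellar-etl-airflow repository, and the sketch does not reproduce what upload_static_to_gcs.sh does.

```python
import shlex
from typing import List


def dags_import_command(environment: str, location: str, source: str) -> List[str]:
    """Build the gcloud command that imports a local file into the
    dags/ folder of a Composer environment's bucket."""
    return [
        "gcloud", "composer", "environments", "storage", "dags", "import",
        "--environment", environment,
        "--location", location,
        "--source", source,
    ]


# Placeholder values: substitute your own environment, region, and DAG file.
cmd = dags_import_command("my-composer-env", "us-central1", "dags/example_dag.py")
print(shlex.join(cmd))
# To run it for real: subprocess.run(cmd, check=True)
```

Building the command as a list keeps arguments with spaces intact when it is later passed to `subprocess.run`.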

Configuring Airflow Variables

Please see the Airflow Variables Explanation documentation for more information about which variables can and must be configured.
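Variables can be set in the Airflow UI, or from the command line. The sketch below builds one `gcloud composer environments run ... variables set` command per variable, assuming the environment runs Airflow 2, whose CLI uses `variables set`. The variable names and values shown are hypothetical placeholders; the real set is described in the Airflow Variables Explanation documentation.

```python
import json
from typing import Any, Dict, List


def variable_set_commands(
    environment: str, location: str, variables: Dict[str, Any]
) -> List[List[str]]:
    """Build one gcloud command per Airflow variable.

    Non-string values are serialized to JSON so they can be stored as text.
    """
    commands = []
    for key, value in variables.items():
        text = value if isinstance(value, str) else json.dumps(value)
        commands.append([
            "gcloud", "composer", "environments", "run", environment,
            "--location", location,
            "variables", "set", "--", key, text,
        ])
    return commands


# Hypothetical variable names and values, for illustration only.
example_variables = {
    "example_project": "my-gcp-project",
    "example_settings": {"batch_size": 100},
}
for cmd in variable_set_commands("my-composer-env", "us-central1", example_variables):
    print(cmd)
```

Everything after `--` is handed to the Airflow CLI unchanged, which is why the key and value come last.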

Running the DAGs

To run a DAG, all you have to do is toggle the DAG on/off as seen below.

More information about each DAG can be found in the DAG Diagrams documentation.
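The toggle in the Airflow UI corresponds to pausing and unpausing a DAG, which can also be done from the command line. The following is a minimal sketch that builds the matching command through `gcloud composer environments run`, assuming an Airflow 2 environment, whose CLI uses `dags pause` and `dags unpause`. The environment name, region, and DAG ID are placeholders.

```python
from typing import List


def dag_toggle_command(
    environment: str, location: str, dag_id: str, enabled: bool
) -> List[str]:
    """Build the gcloud command that turns a DAG on (unpause) or off (pause)."""
    action = "unpause" if enabled else "pause"
    return [
        "gcloud", "composer", "environments", "run", environment,
        "--location", location,
        "dags", action, "--", dag_id,
    ]


# Placeholder values: substitute your own environment, region, and DAG ID.
print(" ".join(dag_toggle_command("my-composer-env", "us-central1", "example_dag", True)))
```

Once unpaused, a DAG runs according to its own schedule, just as it does when toggled on in the UI.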

Available DAGs

More information can be found here

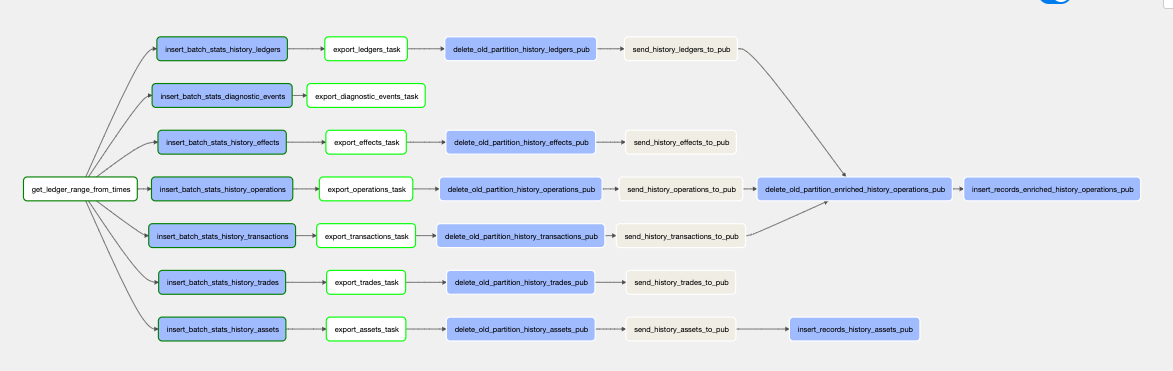

History Table Export DAG

- Exports a subset of sources (ledgers, operations, transactions, trades, effects, and assets) from Stellar using the data lake of LedgerCloseMeta files

- Optionally, this can ingest data using captive-core, but that is neither ideal nor recommended for use with Airflow

- Inserts into BigQuery

State Table Export DAG

- Exports accounts, account_signers, offers, claimable_balances, liquidity_pools, trustlines, contract_data, contract_code, config_settings, and ttl

- Inserts into BigQuery

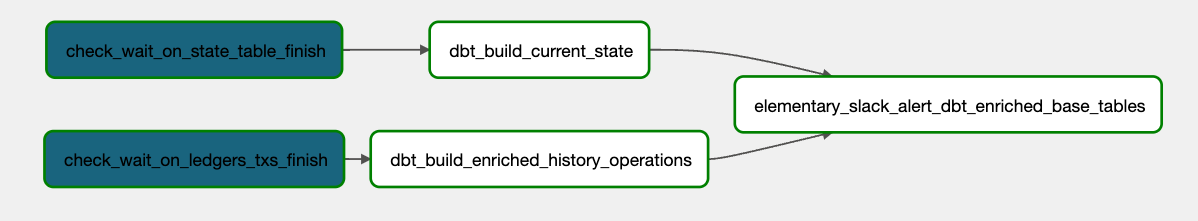

DBT Enriched Base Tables DAG

- Creates the DBT staging views for models

- Updates the enriched_history_operations table

- Updates the current state tables

- (Optional) Warnings and errors are sent to Slack